Relevance Engineering is a relatively new concept, but companies such as Flax and our partners Open Source Connections have been carrying out relevance engineering for many years. So what is a relevance engineer and what do they do? In this series of blog posts I'll try to explain what I see as a...Continue reading

Category Archives: Technical

Highlights of Search, Store, Scale & Stream – Berlin Buzzwords 2018

I spent last week in a sunny Berlin for the Berlin Buzzwords event (and subsequently MICES 2018, of which more later). This was my first visit to Buzzwords, which was held in an arts & culture complex in an old brewery north of the city centre. The event was larger than I had expected, with around 550 people and three main tracks of talks. Although due to so...Continue reading

London Lucene/Solr Meetup – Java 9 & 1 Beeelion Documents with Alfresco

This time Pivotal were our kind hosts for the London Lucene/Solr Meetup, providing a range of goodies including some frankly enormous pizzas - thanks Costas and colleagues, we couldn't have done it without you! Our first talk was from Uwe Schindler, Lucene committer, who started with...Continue reading

Finding the Bad Actor: Custom scoring & forensic name matching with Elasticsearch

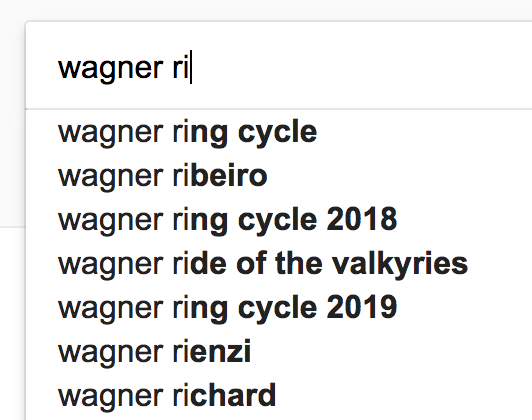

A search-based suggester for Elasticsearch with security filters

Both Solr and Elasticsearch include suggester components, which can be used to provide search engine users with suggested completions of queries as they type.

Query autocomplete has become an expected part of the search experience. Its benefits to the user include les...Continue reading
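To make the idea of a suggester with security filters concrete, here is a minimal in-memory sketch in Python. It is not the Solr or Elasticsearch suggester API: the `Doc` type, its `allowed_groups` field and the `suggest` function are hypothetical. It completes a typed prefix using only terms from documents the current user is permitted to see, ranking completions by how many visible documents contain them.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Doc:
    # Hypothetical document: searchable text plus the groups allowed to see it.
    text: str
    allowed_groups: set[str] = field(default_factory=set)


def suggest(docs: list[Doc], prefix: str, user_groups: set[str], limit: int = 5) -> list[str]:
    """Suggest completions for `prefix`, drawn only from documents the user may see."""
    prefix = prefix.lower()
    counts: Counter[str] = Counter()
    for doc in docs:
        # Security filter: skip documents that share no group with the user.
        if not (doc.allowed_groups & user_groups):
            continue
        # Count each matching term at most once per document.
        for term in set(doc.text.lower().split()):
            if term.startswith(prefix):
                counts[term] += 1
    # Most frequent completions first; ties broken alphabetically for stable output.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:limit]]


docs = [
    Doc("quarterly sales report", {"sales"}),
    Doc("quarterly targets", {"sales"}),
    Doc("payroll quarterly summary", {"hr"}),
    Doc("quantum computing notes", {"sales", "hr"}),
]
print(suggest(docs, "qua", {"sales"}))  # ['quarterly', 'quantum']
print(suggest(docs, "pay", {"sales"}))  # [] because the payroll document is HR-only
print(suggest(docs, "pay", {"hr"}))     # ['payroll']
```

The point of filtering before counting is that a user never sees a completion, or a popularity signal, that leaks the existence of documents they cannot access.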


Worth the wait – Apache Kafka hits 1.0 release

We've known about Apache Kafka for several years now - we first encountered it when we developed a prototype streaming Boolean search engine for media monitoring with our own library Luwak. Kafka is a distributed streaming platform with some simple but powerful concepts - everything it deals with is a stream ...Continue reading

Better performance with the Logstash DNS filter

We've been working on a project for a customer which uses Logstash to read messages from Kafka and write them to Elasticsearch. It also parses the messages into fields and, depending on the content type, does DNS lookups (both forward and reverse). While performance testing, I noticed that adding caching to the Logstash DNS filter actually reduced performance, contrary to expectations. With four filter worker threads and the following configuration:

dns {
  resolve => [ ...Continue reading

Elasticsearch, Kibana and duplicate keys in JSON

JSON has been the lingua franca of data exchange for many years. It's human-readable, lightweight and widely supported. However, the JSON spec does not define what parsers should do when they encounter a duplicate key in an object, e.g.:

{
  "foo": "spam",
  "foo": "eggs",
  ...
}

Implementations are free to interpret this however they like, and when different systems interpret it differently, this can cause problems.
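As one concrete interpretation, Python's standard `json` module silently keeps the last value for a repeated key. The sketch below shows that default, then uses the real `object_pairs_hook` parameter of `json.loads` to reject duplicates instead. The `reject_duplicates` helper is our own illustration; it says nothing about how Elasticsearch or Kibana behave.

```python
import json


def reject_duplicates(pairs):
    """object_pairs_hook that raises if any key appears twice in one JSON object."""
    result = {}
    for key, value in pairs:
        if key in result:
            raise ValueError(f"duplicate key in JSON object: {key!r}")
        result[key] = value
    return result


doc = '{"foo": "spam", "foo": "eggs"}'

# Default behaviour: the parser keeps the last value without complaint.
print(json.loads(doc))  # {'foo': 'eggs'}

# Stricter behaviour: fail loudly so the ambiguity is caught early.
try:
    json.loads(doc, object_pairs_hook=reject_duplicates)
except ValueError as err:
    print(err)  # duplicate key in JSON object: 'foo'
```

Because the hook is called for every object, nested ones included, the same check covers duplicates at any depth of the document.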

We recently encounter...Continue reading

London Lucene/Solr Meetup: Query Pre-processing & SQL with Solr

Bloomberg kindly hosted the London Lucene/Solr Meetup last night, and we were lucky enough to have two excellent speakers for the thirty or so attendees. Kriegler kicked off with a talk about the...

Release 1.0 of Marple, a Lucene index detective

Back in October, at our London Lucene Hackday, Flax's Alan Woodward started to write Marple, a new open source tool for inspecting Lucene indexes. Since then we have made nearly 240 commits to the Marple GitHub repository and are now happy to announce its first release. Continue reading