For most applications, Xapian/Flax’s search performance will range from acceptable to excellent on a single machine of reasonable spec (see here for a discussion of CPU and RAM requirements). However, if the document corpus is unusually large (more than about 20 million items), one server may not be enough for acceptable speed. Xapian provides a mechanism called remote backends, which lets the search load be shared across several machines and so improves performance. With this technique, sometimes known as sharding, scalability is effectively limitless (hardware budget allowing!).

To illustrate, let’s take a hypothetical news archive as our example. It collects news stories and blog posts from a wide range of sources, adds them to a Xapian index, and allows users to search the archive. For the sake of argument, we’ll say it accumulates about 20 million items per month, and that it started in December 2008. Users can search the story text, and optionally restrict the search to a date range, news source, etc.

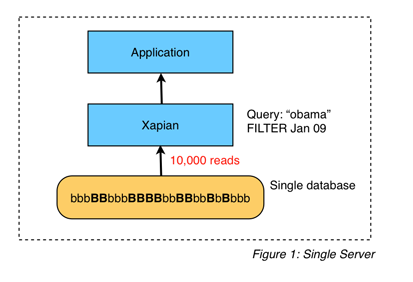

Ignoring the fine details, this is what data flow would look like on a single machine:

The current user is searching for “obama” in the date range 1-31 January 2009. Disk blocks which are relevant to this search are shown as “B”, while irrelevant blocks are shown as “b” (only a tiny sample of blocks is illustrated).

Again, for the sake of argument, let’s say this search has to read 10,000 blocks in order to retrieve the result set, taking a few seconds. This is unacceptably slow, so the archive administrators decide to distribute the search over multiple machines, using the Xapian remote backend. They use the documentation here to set up three search servers (to begin with), and put the data for December 2008 on the first, January 2009 on the second, and February 2009 on the third. This seems like a good plan, as it will be easy to add a new machine each month and start indexing to a new database.

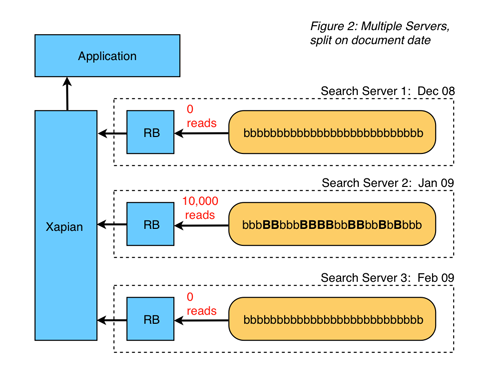

However, this way of partitioning the data is far from optimal, and in the case of the query mentioned above will not provide any performance gain at all. We can see why in the diagram below (RB boxes are Xapian remote backend servers):

Remember that the user was searching for “obama” in the date range 1-31 January 2009. Since Server 2 contains all the data for this month, and the other servers contain none, this means Server 2 has to do all the work – 10,000 disk reads as before. The end result is that the search is just as slow, and Servers 1 and 3 are idle for this query.

This sort of problem is likely to occur for any partitioning function which is not orthogonal (completely unrelated) to any variable which a user may use in a query. Say instead that the data is partitioned on news source name (Reuters, CNBC, BBC etc). A user may want to search in just one or two sources, in which case the load will again be unevenly distributed over the servers.
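To make the imbalance concrete, here is a minimal sketch in plain Python (it does not use Xapian). The miniature corpus, the field names and the helper functions are all hypothetical, and counting matching documents per server is only a rough stand-in for counting disk block reads.

```python
# Hypothetical miniature archive: three months and three sources, evenly mixed.
MONTHS = ["2008-12", "2009-01", "2009-02"]
SOURCES = ["Reuters", "CNBC", "BBC"]
DOCS = [
    {"month": MONTHS[i % 3], "source": SOURCES[(i // 3) % 3]}
    for i in range(9000)
]
NUM_SERVERS = 3


def by_month(doc):
    """Partitioning function: one server per month."""
    return MONTHS.index(doc["month"])


def by_source(doc):
    """Partitioning function: one server per news source."""
    return SOURCES.index(doc["source"])


def in_january(doc):
    """Query filter: date range 1-31 January 2009."""
    return doc["month"] == "2009-01"


def from_reuters(doc):
    """Query filter: restrict to a single news source."""
    return doc["source"] == "Reuters"


def load_per_server(docs, partition, matches):
    """Count the matching documents each server has to examine."""
    load = [0] * NUM_SERVERS
    for doc in docs:
        if matches(doc):
            load[partition(doc)] += 1
    return load


for name, partition in [("by month", by_month), ("by source", by_source)]:
    print(name,
          "| date query:", load_per_server(DOCS, partition, in_january),
          "| source query:", load_per_server(DOCS, partition, from_reuters))
```

In this toy data, each partitioning scheme leaves one server doing all the work for the query that filters on the same variable, while the other two servers sit idle. A parallel search is only as fast as its busiest server, so that query gains nothing from the extra machines.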

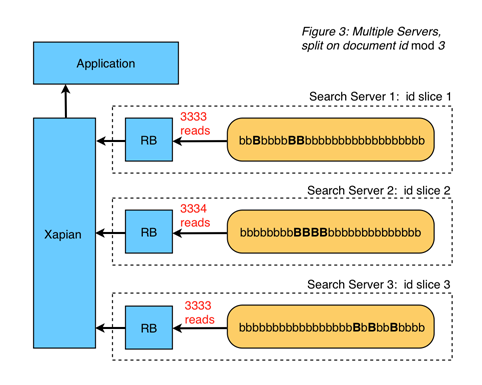

How then to partition the documents? One approach is to assign each a unique numerical ID (if not already assigned), divide this by the number of search servers, and take the remainder (mod function). If the remainder is 0, assign this document to the first server; if 1, to the second, and so on. This is shown in the diagram below:

Now, each server has an approximately equal number of blocks relevant to the query. Each server will therefore complete its share of the query in about a third of the time, and since the servers work in parallel, the overall search will be roughly three times faster.
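The modulo assignment described above can be sketched in a few lines of Python. The function name `server_for` and the sample set of matching IDs are hypothetical, chosen only for illustration.

```python
def server_for(doc_id: int, num_servers: int) -> int:
    """Return the zero-based index of the server that stores this document."""
    if num_servers < 1:
        raise ValueError("num_servers must be at least 1")
    return doc_id % num_servers


NUM_SERVERS = 3

# Hypothetical IDs of the documents that match the user's query.
matching_ids = range(0, 21000, 7)

# Count how many matching documents land on each server.
counts = [0] * NUM_SERVERS
for doc_id in matching_ids:
    counts[server_for(doc_id, NUM_SERVERS)] += 1

print(counts)  # [1000, 1000, 1000]
```

The balance depends on the IDs being unrelated to anything a user can filter on. If, for example, IDs were allocated so that every document from one source were a multiple of 3, the same skew would reappear.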

Any other orthogonal partitioning function would also be suitable, such as one based on a digest of the document content. However, a numerical ID is often the simplest. One problem with this partitioning style is that adding new machines is not such a straightforward procedure, and therefore it is simplest if the number of search nodes is decided at the beginning. Having said that, it is simple enough to repartition the databases if necessary.
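As a rough sketch of both points, the Python below shows a digest-based assignment and counts how many documents would change server if a modulo-partitioned archive grew from three servers to four. The function names are hypothetical, and SHA-256 is just one possible choice of digest.

```python
import hashlib


def server_for_content(content: bytes, num_servers: int) -> int:
    """Pick a server from a digest of the document content."""
    digest = hashlib.sha256(content).digest()
    # Interpret the first 8 bytes of the digest as an unsigned integer.
    return int.from_bytes(digest[:8], "big") % num_servers


def moved_on_resize(doc_ids, old_servers: int, new_servers: int) -> int:
    """Count documents whose modulo-assigned server changes after a resize."""
    return sum(
        1 for doc_id in doc_ids
        if doc_id % old_servers != doc_id % new_servers
    )


story = "Example story text".encode("utf-8")
print(server_for_content(story, 3))

# Growing from 3 to 4 servers moves three quarters of these documents.
print(moved_on_resize(range(1200), 3, 4))  # 900
```

With simple modulo assignment, most documents end up on a different server after a resize. That is why it is simplest to fix the number of search nodes in advance, or to treat adding a node as a full repartition.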

We plan to make all of this automatic in a future release of Flax. In the meantime, don’t hesitate to get in touch with us if you have any questions about this or any other search topic.

I would disagree with “not provide any performance gain at all” for the Figure 2 model: the search will run on a database 1/3rd the size of the original, so it will be faster.

What I do not understand in both models (Figure 2 and Figure 3) is this: when I want, say, the first 10 results and I get 10 results from each server, how can I compare the relevance of the results returned by each server and sort them by relevance?

“the search will run on a database 1/3rd the size of the original, so it will be faster.”

That’s mostly incorrect. The search time is proportional not to the size of the database, but to the number of blocks read. In this case, the number of block reads will be the same. So the search time will be just as long. Think of it this way: reading the first 1000 bytes of a 10GB flat file is much faster than reading the whole file.

Regarding the relevance issue: in Xapian, full statistics are exchanged by the remote protocol, so the ranking will be exactly the same as for a single database. This is not the case in SOLR, so there you have to be careful that the shards are “balanced”, with similar statistics on each; otherwise the final ranking will be skewed.

– Tom